⇒ Cloud Storage Concepts

Cloud Storage is Google's Object Storage

Cloud Storage is not the same as file storage in which one manages data as a hierarchy of folders

Cloud Storage is not the same as block storage in which the operating system manages data as chunks of disk

Object Storage allows one to store arbitrary bunch of bytes and let you address it with a unique key. Often these unique keys are in the form of URLs which means object storage interacts nicely with Web technologies

Cloud Storage is not a file system because each of your objects has a URL

Cloud Storage is a fully managed scalable service that stores objects with high durability and high availability

Use Cloud Storage for lots of things: serving website content, storing data for archival and disaster recovery, or distributing large data objects to end users via direct download

Cloud Storage is comprised of Buckets you create and configure and use to hold your storage objects

Buckets have a globally unique name. One has to specify a geographic location where the Bucket and its contents will be stored and choose a default storage class

The storage objects are immutable which means that you do not edit them in place but instead you create new versions

Cloud Storage always encrypts your data on the server side before it is written to disk and you don't pay extra for that. Also by default, data in-transit is encrypted using HTTPS

Use of Cloud IAM to control access to your objects and Buckets is sufficient. Roles are inherited from Project to Bucket to Object. If you need finer control, you can create Access Control Lists (ACLs). ACLs define who has access to your Buckets and Objects as well as what level of access they have. Each ACL consists of two pieces of information, a scope which defines who can perform the specified actions, for example, a specific user or group of users and a permission which defines what actions can be performed. For example, read or write

Remember Cloud Storage objects are immutable. One can turn on object Versioning on Buckets if desired. If enabled, Cloud Storage keeps a history of modifications

One can list the archived versions of an object, restore an object to an older state or permanently delete a version as needed

If object Versioning is disabled, new always overrides old

Cloud Storage also offers lifecycle management policies. For example, one could tell Cloud Storage to delete objects older than 365 days. Or delete objects created before January 1, 2013 or keep only the three most recent versions of each object in a bucket that has versioning enabled

Cloud Storage lets you choose among four different types of storage classes: Multi-Regional, Regional, Nearline, and Coldline

Multi-regional and Regional are high-performance object storage

Nearline and Coldline are backup and archival storage

All of the storage classes are accessed in comparable ways using the cloud storage API and they all offer millisecond access times

Multi-regional storage cost a bit more but it's Geo-redundant. That means you pick a broad geographical location like the United States, the European Union, or Asia and cloud storage stores your data in at least two geographic locations separated by at least 160 kilometers

Regional storage lets you store data in a specific GCP region: US Central one, Europe West one or Asia East one. It's cheaper than Multi-regional storage but it offers less redundancy

Multi-regional storage is appropriate for storing frequently accessed data. For example, website content, interactive workloads, or data that's part of mobile and gaming applications

Regional storage is used for storing data close to the Compute Engine, virtual machines, or the Kubernetes Engine clusters. That gives better performance for data-intensive computations

Nearline storage is a low-cost, highly durable service for storing infrequently accessed data. The storage class is a better choice for scenarios where you plan to read or modify your data once a month or less on average. For example, if you want to continuously add files to cloud storage and plan to access those files once a month for analysis

Coldline storage is a very low cost, highly durable service for data archiving, online backup, and disaster recovery. Coldline storage is the best choice for data that you plan to access at most - once a year. This is due to its slightly lower availability, 90-day minimum storage duration, costs for data access, and higher per operation costs. For example, if you want to archive data or have access to it in case of a disaster recovery event

Availability of these storage classes varies with Multi-regional having the highest availability of 99.95 percent followed by Regional with 99.9 percent and Nearline and Coldline with 99 percent

As for pricing, all storage classes incur a cost per gigabyte of data stored per month, with Multi-regional having the highest storage price and Coldline the lowest storage price. Egress and data transfer charges may also apply. In addition to those charges, Nearline storage also incurs an access fee per gigabyte of data read and Coldline storage incurs a higher fee per gigabyte of data read

Nearline and Coldline storage classes have lower storage fees but incur additional costs for data retrieval

To move data, use gsutil cloud storage command from this cloud SDK. You can also move data in with a drag and drop in the GCP console, if you use the Google Chrome browser

Google Cloud platform offers the online storage transfer service and the offline Transfer Appliance to help. The storage transfer service lets you schedule and manage batch transfers to cloud storage from another cloud provider from a different cloud storage region or from an HTTPS endpoint

The Transfer Appliance is a rackable, high-capacity storage server that you lease from Google Cloud. You simply connect it to your network, load it with data, and then ship it to an upload facility where the data is uploaded to cloud storage. This service enables you to securely transfer up to a petabyte of data on a single appliance

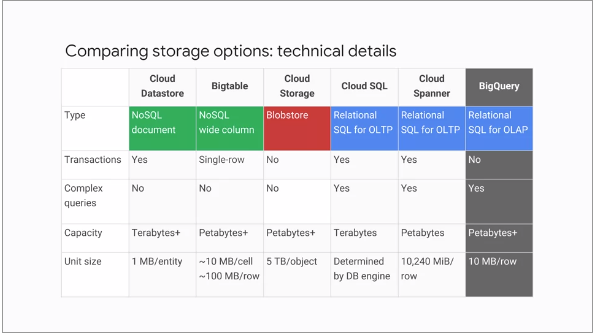

Consider using Cloud Storage if you need to store immutable blobs larger than 10 megabytes such as large images or movies. This storage service provides petabytes of capacity with a maximum unit size of five terabytes per object