⇒ Stackdriver Concepts

Stackdriver is GCP's tool for monitoring, logging and diagnostics

Stackdriver gives one access to many different kinds of signals from the virtual machines, containers, middleware and application tier, logs, metrics and traces. It gives one insight into the application's health, performance and availability

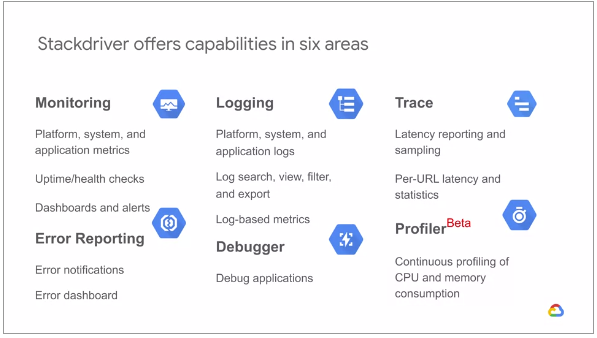

The core components of Stackdriver are: Monitoring, Logging, Trace, Error Reporting and Debugging

Stackdriver Monitoring checks the endpoints of web applications and other Internet accessible services running on the cloud environment. One can configure uptime checks associated with URLs, groups or resources such as Instances and load balancers. One can set up alerts on interesting criteria, like when health check results or uptimes fall into levels that need action. One can use Monitoring with a lot of popular notification tools. And one can create dashboards to help visualize the state of the application

Stackdriver Logging lets one view logs from the applications as well as filter and search on them. Logging also lets one define metrics, based on log contents that are incorporated into dashboards and alerts. One can also export logs to BigQuery, Cloud Storage and Cloud PubSub

Stackdriver Error Reporting tracks and groups the errors in the cloud applications. And it notifies the user when new errors are detected

With Stackdriver Trace, one can sample the latency of app engine applications and report per-URL statistics

Stackdriver Debugger connects the applications production data to the source code. So one can inspect the state of the application at any code location in production. That means one can view the application stage without adding logging statements

Stackdriver Debugger works best when the application source code is available, such as in Cloud Source repositories. Although it can be in other repositories too

The following illustrates the various capabilities of Stackdriver: